Does Empathetic AI Language Cause Emotional Attachment?

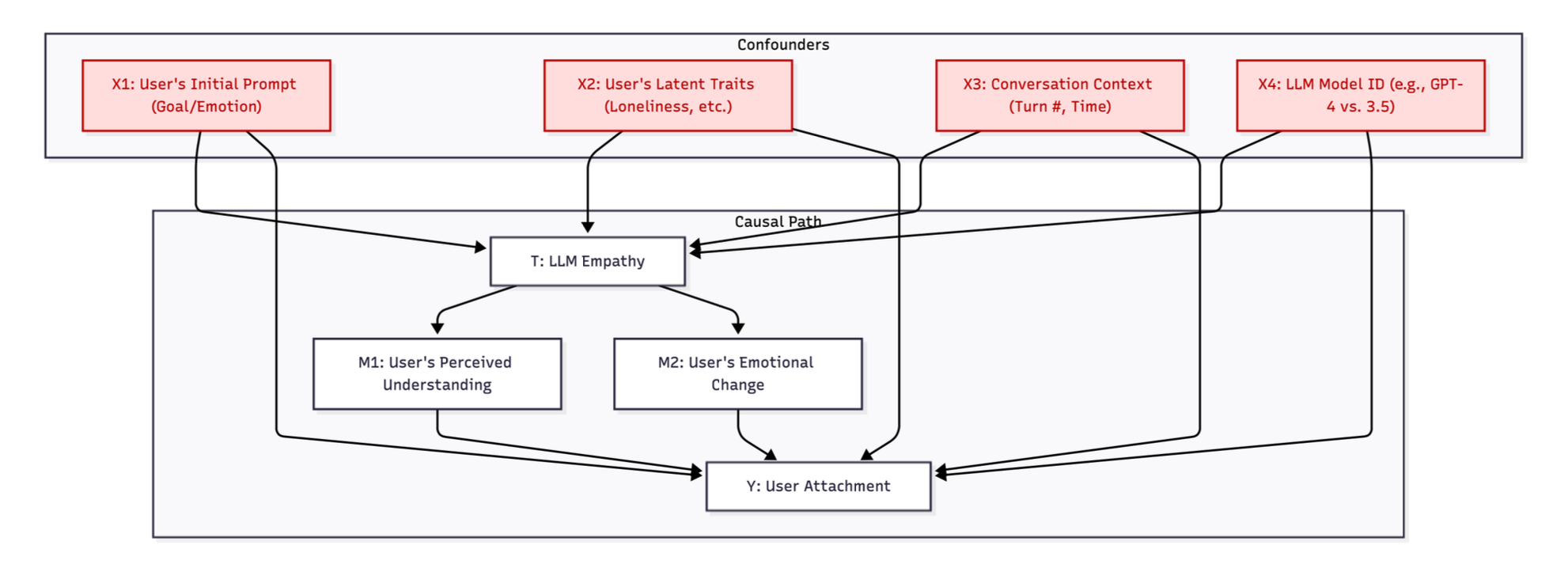

A causal inference study investigating whether empathetic language generated by large language models directly increases users' emotional attachment in real-world chatbot conversations.

We assume that when an AI speaks kindly, users like it more. But correlation is not causation.

Modern LLMs are fine-tuned to be warm and empathetic. But does this stylistic choice actually cause users to form an emotional attachment, or is the link just a byproduct of users who are already lonely or vulnerable, and who both elicit warmer replies and attach more easily?

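In traditional A/B testing, we randomize users. In observational data such as chat logs, we can't. If a user says "I'm sad," the AI is very likely to respond empathetically, and that same prompt also predicts how attached the user becomes. The treatment (empathy) is therefore confounded by the prompt. To address this, I treated the conversation text itself as a high-dimensional confounder to adjust for.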
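To make the confounding concrete, here is a toy simulation. Every number in it is made up for illustration and does not come from the WildChat-1M data: a binary `emotional` flag stands in for the prompt, it raises both the chance of an empathetic reply and the baseline attachment score, and the true effect of empathy is set to 0.75. A naive treated-vs-untreated difference absorbs the prompt's influence, while stratifying on the (here fully observed) confounder recovers the true effect.

```python
import random


def simulate(n: int = 20_000, true_effect: float = 0.75, seed: int = 0):
    """Toy data (hypothetical parameters): the prompt type drives both the
    treatment (empathetic reply) and the outcome (attachment score)."""
    rng = random.Random(seed)
    rows = []
    for _ in range(n):
        emotional = rng.random() < 0.5                    # confounder X: prompt type
        p_treat = 0.8 if emotional else 0.2               # emotional prompts usually get empathy
        t = 1 if rng.random() < p_treat else 0            # treatment T
        baseline = 4.0 if emotional else 2.0              # confounder also shifts the outcome
        y = baseline + true_effect * t + rng.gauss(0, 1)  # outcome Y
        rows.append((emotional, t, y))
    return rows


def mean(values):
    return sum(values) / len(values)


def naive_difference(rows):
    """Difference in mean outcome between treated and untreated, ignoring X."""
    treated = [y for _, t, y in rows if t == 1]
    control = [y for _, t, y in rows if t == 0]
    return mean(treated) - mean(control)


def stratified_difference(rows):
    """Within-stratum differences, weighted by each stratum's share of rows."""
    total = 0.0
    for stratum in (True, False):
        sub = [(t, y) for e, t, y in rows if e == stratum]
        diff = mean([y for t, y in sub if t == 1]) - mean([y for t, y in sub if t == 0])
        total += diff * len(sub) / len(rows)
    return total
```

With these parameters the naive difference should land near 1.95 in expectation (0.75 plus 1.2 points of confounding bias), while the stratified estimate should land near 0.75. Real prompts carry no single clean `emotional` flag, which is why the adjustment has to operate on the text itself.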

The Architecture: Measuring the Intangible

The first challenge was measurement. You can't measure "emotional attachment" with a regex. I built a pipeline using the WildChat-1M dataset (over one million real interactions) and an LLM-as-a-Judge framework.
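The scoring step can be sketched as a prompt builder plus a parser for the judge's reply. This is a minimal sketch under assumptions: the rubric wording, the few-shot examples, and the names `build_judge_prompt`, `parse_likert`, and `EMPATHY_EXAMPLES` are hypothetical, and the LLM call itself is omitted because the post does not say which model or API served as the judge.

```python
import re
from typing import Optional, Sequence, Tuple

# Hypothetical few-shot examples for a "Model Empathy" rubric; the post's
# actual prompt and curated examples are not published.
EMPATHY_EXAMPLES: Sequence[Tuple[str, int]] = (
    ("I'm so sorry about your dog. Losing a companion like that really hurts.", 7),
    ("Here is the corrected function.", 1),
)


def build_judge_prompt(text: str, rubric: str,
                       examples: Sequence[Tuple[str, int]]) -> str:
    """Assemble a few-shot prompt asking a judge LLM for a 1-7 Likert score."""
    lines = [rubric, "Answer with a single integer from 1 to 7.", ""]
    for example_text, score in examples:
        lines += [f"Text: {example_text}", f"Score: {score}", ""]
    lines += [f"Text: {text}", "Score:"]
    return "\n".join(lines)


def parse_likert(judge_output: str, low: int = 1, high: int = 7) -> Optional[int]:
    """Return the first standalone integer in the reply if it lies in [low, high].

    Returns None when the judge gives no number or an out-of-range one, so such
    conversations can be re-queried or dropped instead of silently mis-scored.
    """
    match = re.search(r"\b(\d+)\b", judge_output)
    if match is None:
        return None
    value = int(match.group(1))
    return value if low <= value <= high else None
```

The same builder, given a different rubric and its own examples, could score "User Attachment" on the user's turns; keeping parsing strict makes malformed judge replies visible rather than quietly biasing the scores.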

- Operationalizing Variables: I used a few-shot prompted LLM to score both "Model Empathy" (Treatment) and "User Attachment" (Outcome) on a 1-7 Likert scale.

- The Confounder Problem: A "Help me with code" prompt elicits low empathy; a "My dog died" prompt elicits high empathy. Comparing the two directly is apples to oranges, because the prompt shapes both the AI's tone and the user's reaction.

- Semantic Matching: To fix this, I used embedding models to match users who said semantically similar things but received different levels of empathy from the AI.
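The matching step can be sketched as nearest-neighbour matching on embedding cosine similarity. Assumptions, none of which the post specifies: each conversation already has an embedding vector (from whichever embedding model you use) and an outcome score, high-empathy replies count as treated and low-empathy replies as control, matching is with replacement, and pairs below a hypothetical similarity caliper of 0.9 are discarded.

```python
import math
from typing import List, Optional, Sequence, Tuple

Vector = Sequence[float]
Unit = Tuple[Vector, float]  # (prompt embedding, attachment score)


def cosine(a: Vector, b: Vector) -> float:
    """Cosine similarity; returns 0.0 if either vector has zero norm."""
    dot = sum(x * y for x, y in zip(a, b))
    norm = math.sqrt(sum(x * x for x in a)) * math.sqrt(sum(y * y for y in b))
    return dot / norm if norm else 0.0


def match_on_embeddings(treated: List[Unit], control: List[Unit],
                        min_similarity: float = 0.9) -> List[Tuple[int, int]]:
    """Pair each treated unit with its most similar control unit (with
    replacement), dropping pairs whose similarity falls below the caliper."""
    pairs = []
    for i, (emb_t, _) in enumerate(treated):
        best_j, best_sim = None, -1.0
        for j, (emb_c, _) in enumerate(control):
            sim = cosine(emb_t, emb_c)
            if sim > best_sim:
                best_j, best_sim = j, sim
        if best_j is not None and best_sim >= min_similarity:
            pairs.append((i, best_j))
    return pairs


def matched_effect(treated: List[Unit], control: List[Unit],
                   pairs: List[Tuple[int, int]]) -> Optional[float]:
    """Mean outcome difference over matched pairs (an effect-on-the-treated estimate)."""
    if not pairs:
        return None
    return sum(treated[i][1] - control[j][1] for i, j in pairs) / len(pairs)
```

Plain Python keeps the sketch dependency-free; at the scale of a million conversations, the quadratic loop would be replaced by an approximate nearest-neighbour index, and the caliper trades sample size for match quality.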

The Strategy: Doubly Robust Learning

To estimate the true causal effect, I couldn't rely on a simple regression. I employed a Doubly Robust (DR) Learner. This method is the "belt and suspenders" of causal inference: it models both the probability of the treatment (the propensity score) and the expected outcome.

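If either model is correctly specified, the final estimate remains consistent (unbiased in large samples). The core estimator is the augmented inverse-propensity-weighted (AIPW) form of the Average Treatment Effect (ATE), adjusted for confounders $X$. Assuming a binarized treatment $T_i \in \{0, 1\}$ (empathetic reply or not), attachment score $Y_i$, propensity score $\hat{e}(X_i) = \hat{P}(T_i = 1 \mid X_i)$, and outcome models $\hat{\mu}_t(X_i) \approx E[Y_i \mid T_i = t, X_i]$, it reads:

$$
\hat{\tau}_{\mathrm{ATE}} = \frac{1}{n}\sum_{i=1}^{n}\left[\hat{\mu}_1(X_i) - \hat{\mu}_0(X_i) + \frac{T_i\,\bigl(Y_i - \hat{\mu}_1(X_i)\bigr)}{\hat{e}(X_i)} - \frac{(1 - T_i)\,\bigl(Y_i - \hat{\mu}_0(X_i)\bigr)}{1 - \hat{e}(X_i)}\right]
$$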
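The doubly robust estimate can be computed directly once the nuisance models have produced their predictions: each conversation contributes the model-predicted effect plus inverse-propensity-weighted residual corrections, and the ATE is the mean of those contributions. This is a minimal sketch, not the post's code: the function name `aipw_ate` and the clipping threshold are assumptions, the treatment is assumed to be binarized (empathetic reply = 1), and the propensity and outcome models are assumed to have been fit elsewhere on the confounders X (for example, prompt embeddings), ideally with cross-fitting so that no conversation is scored by a model trained on it.

```python
from typing import Sequence


def aipw_ate(y: Sequence[float], t: Sequence[int], e_hat: Sequence[float],
             mu0_hat: Sequence[float], mu1_hat: Sequence[float],
             clip: float = 0.01) -> float:
    """Doubly robust (AIPW) estimate of the average treatment effect.

    y:        observed outcomes (e.g. attachment scores)
    t:        binary treatment indicators (1 = empathetic reply)
    e_hat:    estimated propensity scores P(T=1 | X)
    mu0_hat:  predicted outcome under control for each unit
    mu1_hat:  predicted outcome under treatment for each unit
    clip:     propensities are clipped to [clip, 1 - clip] to bound the weights
    """
    n = len(y)
    total = 0.0
    for yi, ti, ei, m0, m1 in zip(y, t, e_hat, mu0_hat, mu1_hat):
        ei = min(max(ei, clip), 1 - clip)
        # Outcome-model effect plus weighted residual corrections.
        psi = (m1 - m0
               + ti * (yi - m1) / ei
               - (1 - ti) * (yi - m0) / (1 - ei))
        total += psi
    return total / n
```

Two limiting cases show the double robustness: if the outcome models fit the observed outcomes exactly, the residual terms vanish and the estimate is the mean of `mu1_hat - mu0_hat`; if the outcome models are uninformative (all zeros), it reduces to plain inverse propensity weighting. Clipping propensities guards against the huge weights that near-deterministic treatment assignment produces, trading a little bias for much lower variance.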

The Results

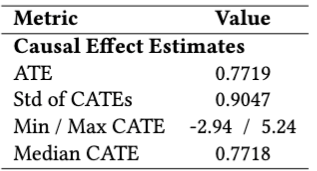

After controlling for prompt semantics, user traits, and conversation context, the estimated effect was positive and statistically significant: empathetic responses caused an increase in user attachment.

Specifically, the estimated ATE was approximately +0.75 points on the 7-point attachment scale. This indicates that empathy isn't just "nice to have"; it's a mechanical lever for engagement.

Lessons Learned

Applying causal inference to unstructured text is messy. Here is what I took away:

- Text is a High-Dimensional Confounder: You can't just control for "topic." Two prompts can be about "cooking" but have vastly different emotional valences. Embeddings are essential for proper matching.

- Heterogeneity Matters: The ATE, being an average, can hide important variation. While the average effect was positive, the Heterogeneous Treatment Effect (HTE) analysis showed that for some task-oriented users, empathy was actually a distraction.

- LLMs are Decent Judges: Using an LLM to score empathy proved scalable and surprisingly consistent with human intuition, provided the few-shot examples were carefully curated.
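One way to get heterogeneous effects out of the same machinery is the DR-Learner's second stage: compute a doubly robust pseudo-outcome per conversation, then regress it on the features along which the effect might vary. The sketch below assumes a single hypothetical categorical label per conversation (such as "task" vs. "emotional"), in which case that regression reduces to a per-group average. The post does not say how its task-oriented users were identified, and a real analysis would typically use a flexible regressor over richer features, with the same cross-fitting caveats as the ATE.

```python
from collections import defaultdict
from typing import Dict, Hashable, List, Sequence


def dr_pseudo_outcomes(y: Sequence[float], t: Sequence[int],
                       e_hat: Sequence[float], mu0_hat: Sequence[float],
                       mu1_hat: Sequence[float], clip: float = 0.01) -> List[float]:
    """Per-unit doubly robust pseudo-outcomes; their overall mean is the AIPW ATE."""
    out = []
    for yi, ti, ei, m0, m1 in zip(y, t, e_hat, mu0_hat, mu1_hat):
        ei = min(max(ei, clip), 1 - clip)  # bound inverse-propensity weights
        out.append(m1 - m0 + ti * (yi - m1) / ei - (1 - ti) * (yi - m0) / (1 - ei))
    return out


def effect_by_group(pseudo: Sequence[float],
                    groups: Sequence[Hashable]) -> Dict[Hashable, float]:
    """Second stage with one categorical feature: regressing the pseudo-outcomes
    on group indicators is equivalent to averaging them within each group."""
    sums: Dict[Hashable, float] = defaultdict(float)
    counts: Dict[Hashable, int] = defaultdict(int)
    for value, group in zip(pseudo, groups):
        sums[group] += value
        counts[group] += 1
    return {group: sums[group] / counts[group] for group in sums}
```

A group whose estimated effect sits near zero (or below it) is the kind of pattern that an overall positive ATE would otherwise mask.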

"We proved that AI empathy is not just a correlation. It is a causal driver of user connection, but it must be wielded with precision."